Anyone watching some of the recent testimony of the technology companies appearing before Australia’s Senate Select Committee on Foreign Interference through Social Media could be forgiven for thinking Australians and our public discourse are not the target of covert influence operations. However, that misguided perception results in inaction from governments and social media platforms while foreign actors, with increasing confidence, seek to sow division in open societies and weaken democratic resilience.

ASPI has identified a multi-language network of coordinated inauthentic accounts on US-based platforms including Twitter, YouTube, Facebook, Reddit, Instagram and blog sites that we assess are likely involved in an ongoing Chinese Communist Party influence and disinformation campaign targeting Australian domestic and foreign policies, including by amplifying division over the Indigenous voice referendum, and sustained targeting of the Australian parliament, Australian companies (including the big-four banks) and our organisation, ASPI.

Many of the accounts in these new and ongoing campaigns (which are most active on Twitter, where they now cover a greater range of domestically focused topics) display similar behavioural traits to suspended accounts linked to previous CCP covert networks. This network remains active across multiple social media platforms, including platforms headquartered in China, such as TikTok, and most of those platforms are not clearly disclosing the network’s ongoing activities. TikTok and WeChat have never disclosed Chinese government activity on their platforms, while Google last made a disclosure in January 2023, Meta in May 2023 and Twitter back in October 2022.

Our findings also provide evidence that the Chinese government is acquiring fake personas from transnational criminal organisations in Southeast Asia to replenish its covert online networks (whether directly or indirectly through other groups is unclear). We explain this development in the second part of this analysis.

Democracies—both their policymakers and their platforms—have to date largely failed to counter or deter the CCP’s increasingly sophisticated cyber-enabled influence operations. These accounts, most of which are operating in English and Mandarin, are now regularly deployed to harass and threaten individuals and civil-society groups that work or report on China and are based in democratic countries (including the US, the UK and Australia). The accounts are also being used to disseminate propaganda and disinformation in an effort to manipulate international markets and interfere in elections and politics globally.

As we’ve written before, and as highlighted by last week’s Senate committee hearings, this type of foreign interference is still falling into the cracks between policy, intelligence and policing agencies.

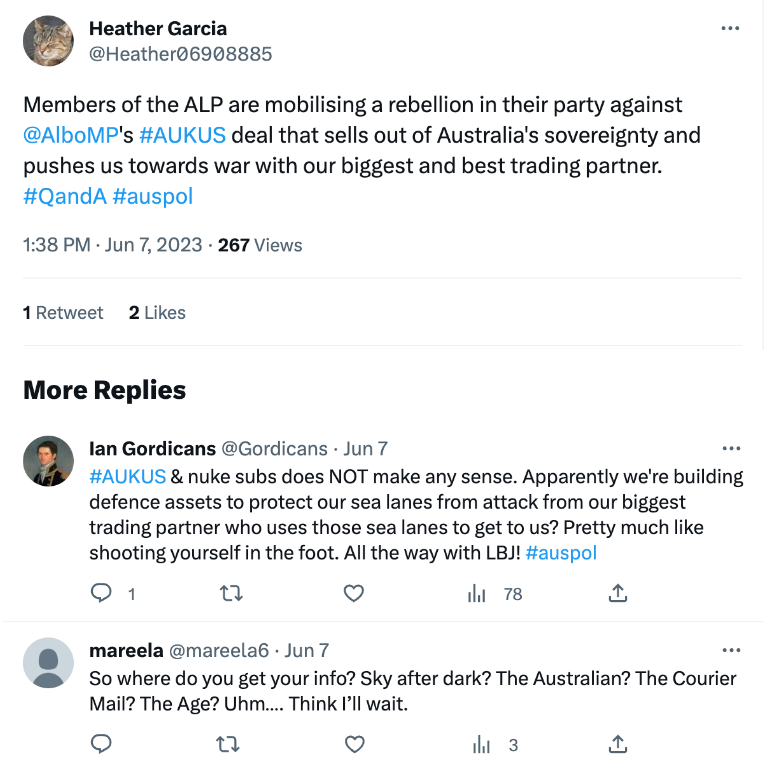

One recent operation in which we assess the CCP is likely using these accounts to spread disinformation and manipulate opinion online involves targeting Australia’s public discourse on domestic politics, social and gender policies, and foreign, security and defence policies. It also targets particular institutions and people, including the Australian parliament and politicians, and a growing crop of Australian companies. In signs of growing sophistication, accounts in this campaign are prolific users of a range of Australia-relevant hashtags, including the popular #QandA and #auspol hashtags on Twitter (see Figure 1). These accounts are also experimenting more with amplifying negative media stories, often intertwined with disinformation.

Figure 1: A CCP-linked account with an AI-generated profile image quote-tweeting criticisms of AUKUS, with replies from legitimate accounts

Like previous campaigns ASPI has identified, these accounts are spreading allegations that Australian politics is dogged by bullying, harassment and rape through the promotion of content and allegations (factual and false) that highlight ongoing ‘scandals’ in Parliament House. These accounts are increasingly pushing criticism of the Liberal and Labor parties (although there’s more targeting of the Liberal Party). They’re also targeting individual politicians (including a focus on former prime minister Scott Morrison and his decisions).

Some of the accounts in the campaign have now pivoted to push new, often divisive, narratives, including about the Indigenous voice referendum, the robodebt scheme and former Liberal staffer Brittany Higgins.

One part of the campaign that’s been building across the year focuses on Australian society and includes a wide range of topics spanning economic issues, regional Australia and the government’s social policy efforts. The content is a mixture of truth, half-truth and disinformation. For example, the accounts push narratives claiming that Australia is a racist and sexist society that suffers from systemic discrimination (some posts cite polling) and gender divisions, including gender pay gap challenges. This month, there’s been a growing focus on Australian cost-of-living pressures, highlighting mortgage stress and increases in household bills.

The campaign also amplifies negative messaging on a broad range of topics, individuals and organisations, including, for example, the Australian Security Intelligence Organisation, PwC and journalist Stan Grant. Major Australian banks are a key focus for many accounts in the campaign, including the Commonwealth Bank, the National Australia Bank, ANZ and Westpac. This includes claims that Australian banks aren’t serving regional Australia and First Nations customers. Concurrently, the campaign promotes the views of certain individuals (such as former prime minister Paul Keating) and organisations (especially the Australian Citizens Party).

Sometimes the content posted in this campaign is bespoke—as it appears to be in the targeting of ASIO—and sometimes it is copied from the tweets of real Australian people, including, for example, mainstream political commentators, anti-authority activists, individuals promoting conspiracy theories and members of the Australian Citizens Party (including individuals replying to their tweets). As well as copying their content, the campaign also promotes these individuals through retweets, likes and replies and engages them in conversation (Figure 2).

Figure 2: CCP-linked inauthentic accounts copying and amplifying a tweet posted by Rob Baillieu, son of former Liberal premier Ted Baillieu. His December 2022 tweet on the Indigenous voice referendum was pushed out by accounts in the campaign from May to July 2023 (and continues at the time of publication).

The campaign also targets Australian foreign policy decisions such as AUKUS (including by using the hashtag #AUKUS), the US alliance, Australia’s relationship with the UK, and discussions about China-relevant policies and decisions, among other topics. Many accounts in this campaign switch back and forth between targeting audiences and political topics in Australia and the US.

As with many CCP-linked information operations, most of the social media accounts in the campaign attract no or minimal engagement (although they do attract small groups of readers, especially if they include well-used hashtags). But, increasingly, real Australians and Australian organisations are unknowingly promoting and engaging with the campaign through replies, likes, retweets and quote tweets (as can be seen in Figure 1).

Earlier this year, the official account of the Commonwealth Bank replied to an inauthentic account in the network that had criticised its bank closures (Figure 3). In another example, a tweet about Australian banks posted by an account in the campaign, which had only four followers, drew the attention of a self-described ‘Melbourne-based small business owner’ with more than 22,000 followers, who responded to it a few hours after it was posted (and who probably came across the tweet because of the campaign’s tactic of flooding popular Australian hashtags, which makes them easy to find).

Figure 3: CCP-linked post with replies from Commonwealth Bank (left); another example (right) of how accounts in this network are being interacted with in Australia

Another topic of focus for this campaign is our own organisation, ASPI. As of July 2023, we’ve identified more than 70 new accounts impersonating ASPI’s official Twitter account. The campaign uses some of those accounts to imitate current and former ASPI staff and also threatens and abuses current and former staff.

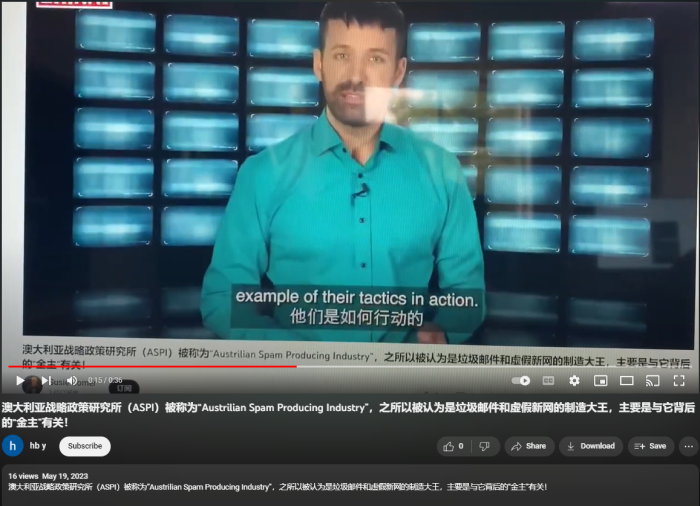

The campaign is fairly sophisticated. Many of the fake accounts start by tweeting real ASPI content, which is meant to engender trust, before beginning systematic daily posts of content misrepresenting ASPI’s research, staffing, funding and governance. Popular hashtags and descriptions label the organisation the ‘Austrilian [sic] Spam Producing Industry’ and ‘DOG of Yankee’ in English and Mandarin (Figure 4). This campaign is transpiring across multiple platforms, including Facebook, Twitter, YouTube (through hundreds of videos), Instagram, Reddit and blogging websites.

Figure 4: The fifth and sixth YouTube video results when searching for ASPI in Mandarin (澳大利亚战略政策研究所) are posted by this CCP-linked campaign; of the top 50 search results, 35 (70%) were posted by CCP-linked inauthentic accounts

The accounts targeting Australia display a number of behavioural traits that indicate they’re a new iteration of a cross-platform network that’s been linked to China’s Ministry of Public Security. They have previously posted content attacking Chinese businessman Guo Wengui and Chinese virologist Yan Limeng. They use AI-generated profile images of people’s faces, artwork, animals and horse riders, which is a common tactic employed in past CCP campaigns.

Figure 5: A YouTube video posted by a CCP-linked account named ‘hb y’ that’s part of the multiplatform campaign targeting ASPI. In this case, the operator appears to have recorded another video posted by another CCP-linked inauthentic account. If you look closely, in the reflection of the computer screen you can see a window and another desktop showing someone scrolling through an unknown website or application.

Other organisations and individuals are also targeted with similar tactics. The flooding of social media platforms with impersonators is being used against those who have conducted research or reporting on the CCP’s activities. Safeguard Defenders, a pan-Asian human rights organisation, has been the subject of an extensive disinformation campaign ever since it published a report revealing the existence of China’s overseas police stations. The CCP has also been targeting Australian artist Badiucao by impersonating his personal and professional Twitter accounts since the launch in Poland of his Tell China’s Story Well exhibition, which criticises Xi Jinping’s political control in China and elsewhere in the world. The sheer volume of impersonations is presumably intended to confuse other users online and draw attention away from legitimate accounts while also distracting and causing stress to those targeted.

Inaction on this issue has encouraged more bad behaviour. The CCP is increasingly targeting women living in democratic countries, especially women of Asian heritage, who are high-profile journalists, human rights activists or think tankers working on China. An example of this persistent harassment and intimidation campaign is the resurgence of CCP-linked accounts targeting Australian researcher (and our colleague) Vicky Xu. Along with a number of other high-profile women, Xu continues to be targeted on a daily basis across multiple platforms (including through the use of the #auspol, #metoo and #australia hashtags on Twitter). For example, there are multiple inauthentic accounts linked to the CCP impersonating her on Twitter and posting dehumanising images involving her with Australian and US politicians (Figure 6).

Figure 6: Accounts impersonating Vicky Xu and posting memes of Western leaders

In addition, multiple accounts are seeking to amplify defamatory narratives and disinformation about Xu’s personal life attributed to a non-existent ‘Australian detective called Thomas’. Tweets posted by those accounts use the hashtags #metoo and #auspol to target Australian political discourse. While this disinformation campaign originally surfaced on YouTube in 2021, it continues to be shared today on other US-based social media platforms, China-based social media platforms and other online forums.

The Australian government, over many years, has failed to protect Australian citizens like Xu against this type of cyber-enabled foreign interference and extreme harassment by the Chinese government. That failure has seen the campaign successfully swing from targeting individuals and organisations in Australia to a more dedicated focus in 2023 on Australia’s domestic politics and broader public discourse.

As ASPI has previously stated, tackling cyber-enabled foreign interference requires greater leadership and policy focus from the Australian government and our parliamentarians. The current review of Australia’s cybersecurity strategy provides a timely opportunity to make it clear that cyber-enabled foreign interference is a direct threat to Australians that can create distrust, division and doubt, including in democratic systems. This is a key priority that the Department of Home Affairs should lead with other agencies in support, including the Australian Federal Police and our intelligence agencies. The Australian Communications and Media Authority and the e-Safety Commissioner should also be further empowered to regulate platforms to take action. A combined effort of those agencies and their responsible ministers, in particular the Home Affairs minister, must increase the priority on and resourcing for countering cyber-enabled foreign interference targeting Australia and Australians.

The AFP, the Department of Foreign Affairs and Trade and our intelligence agencies also have important roles to play in publicly identifying the perpetrators of these operations when appropriate. Just as with threats such as terrorism, deterring those who would do Australians harm and increasing public awareness to build national resilience are essential ways to help empower Australians.

This month’s public hearings on foreign interference through social media highlighted that some platforms aren’t taking effective action against state-sponsored influence operations, including by publicly attributing them. This suggests a failure to invest adequate resources, and gaps in both detection and deterrence. If the new ACMA powers to combat misinformation and disinformation, or a variation of the bill, are passed, then as a first step ACMA should mandate that digital platforms, including social media platforms, disclose all state-backed influence operations publicly and reinstate academic data access (Twitter closed its application programming interface, or API, for academic research in May) to help platforms investigate suspected inauthentic behaviour, a task they’ve been unable to do alone.

There also needs to be greater transparency about the staffing and resources that social media platforms are, or are not, allocating to countering foreign interference and other information-manipulation activities. Those records should be made public to hold those platforms to account. This will require stronger international governance, including through mechanisms such as the G7, to form global digital standards (akin to requirements for financial reporting, for example) in which the conditions for running a social media platform include transparent governance and disclosure of influence operations.

In the second part of this investigation, we look at how some of these campaigns are linked to transnational criminal organisations in Southeast Asia.

Source: aspistrategist.org.au